What MCP is Missing: UI Components

What is MCP?

For those of you who don't know, MCP, or Model Context Protocol, is an open standard designed by Anthropic to connect AI assistants to multiple data sources and tools. So far, it's a more successful version of ChatGPT's 'plugin store', for which access has been waitlisted since 2023 🙄.

MCP promises to bridge the gap between an AI client such as Claude, or Cursor, and other applications. Some cool demos include a Blender MCP server, which allows you to control Blender via chat, as well as an Ableton MCP server, allowing one to make beats using chat messages - pretty cool.

What makes MCP great is that it's open, so if someone makes an MCP server that works with Claude, it will also work with any other LLM application that supports the protocol.

Hype?

Now, given the state of things in AI, it's always hard to tell if these sorts of things are just hype. These days, Twitter is full of "BREAKING" and "OMG YOU WONT BELIEVE WHAT THIS MODEL CAN DO". It's quite tiring.

My strategy with new models and frameworks like MCP has generally been to ignore the noise and focus on the details. If it makes sense from first principles, I might investigate more. If I have doubts, I'll take a wait-and-see approach.

MCP vs. API

In the case of MCP, my first thought was "What's wrong with a regular OpenAPI JSON spec and HTTP requests?"

Some people argue that MCP allows the LLM to use APIs at runtime: the developer of an LLM application doesn't need to hardcode the tools that the LLM supports. Instead, the user can provide them dynamically.

However, I'm not convinced.

The reason is that MCP creates extra work. You have to create an entirely new server for the tool. For cases like a Blender tool, or other local computer use, this might make sense, because there might not already be an API. But for other use cases, say a tool to connect to a task management system, there might already be an API for it. And if there's already an API, chances are there's already documentation for it. Therefore, you could pass that documentation to an LLM at runtime and have it make HTTP requests.
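To make that alternative concrete, here is a minimal sketch of what "pass the documentation and let the model make HTTP requests" could look like. Everything in it is an assumption for illustration: the `SPEC` dictionary is a trimmed, made-up OpenAPI document for an imaginary task API at `tasks.example.com`, and `describe_operations` and `build_request` are hypothetical helpers. Nothing is actually sent over the network.

```python
from urllib.parse import urlencode

# Hypothetical, heavily trimmed OpenAPI document for an imaginary task API.
SPEC = {
    "servers": [{"url": "https://tasks.example.com/api"}],
    "paths": {
        "/tasks": {
            "get": {
                "operationId": "listTasks",
                "summary": "List tasks assigned to a user",
                "parameters": [{"name": "assignee", "in": "query"}],
            }
        },
        "/tasks/{task_id}/comments": {
            "post": {
                "operationId": "addComment",
                "summary": "Add a comment to a task",
                "parameters": [{"name": "task_id", "in": "path"}],
            }
        },
    },
}


def describe_operations(spec):
    """Flatten the spec into short lines that could be pasted into a prompt."""
    lines = []
    for path, methods in spec["paths"].items():
        for method, op in methods.items():
            lines.append(f"{op['operationId']}: {method.upper()} {path} - {op['summary']}")
    return lines


def build_request(spec, operation_id, arguments):
    """Turn a model-chosen operation and its arguments into a request description."""
    base = spec["servers"][0]["url"]
    for path, methods in spec["paths"].items():
        for method, op in methods.items():
            if op["operationId"] != operation_id:
                continue
            remaining = dict(arguments)
            url_path, query = path, {}
            for param in op.get("parameters", []):
                name = param["name"]
                if name not in remaining:
                    continue
                value = remaining.pop(name)
                if param["in"] == "path":
                    url_path = url_path.replace("{" + name + "}", str(value))
                elif param["in"] == "query":
                    query[name] = value
            url = base + url_path + ("?" + urlencode(query) if query else "")
            # Arguments that are not path or query parameters become the JSON body.
            return {"method": method.upper(), "url": url, "json": remaining or None}
    raise KeyError(f"unknown operation: {operation_id}")
```

In this sketch the application would show the output of `describe_operations(SPEC)` to the model, and when the model answers with something like `addComment` plus arguments, `build_request` produces the method, URL, and body that a regular HTTP client could send.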

That being said, there are additional details about MCP that I still need to better understand, like the way it separates tools from resources. Also, it seems that both OpenAI and Google have adopted MCP in some way, and so it might stick.

Anyways, I digress. The point of this blog post was to talk about a piece that I think is missing in the MCP protocol, and that piece is UI. To illustrate this, I will give an example of a simple Jira MCP server.

The Need for UI Elements

In an attempt to better understand MCP, I decided to try my hand at making a simple server that connects to the Jira API (Jira is a task management system which I use at work). The idea is that I could use it in Cursor to update my tickets more efficiently, by having the AI pull in the relevant changes that I made and summarize them.

For those that are curious, I made the server open source, available here.

In creating the server, my goal was to have the following functionality:

- View my current tickets

- Update a specific ticket by adding a comment

If you look at the code, you'll find that it's surprisingly simple. I'm essentially just wrapping a few of the Jira API routes with the MCP protocol. And the results? It works great!
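To give a feel for how thin such a wrapper can be, here is a rough sketch of the two tools. This is not the code from my server: the `tool` decorator and `TOOLS` registry are a hypothetical stand-in for what an MCP SDK would provide, `JIRA_BASE` is a placeholder, and the Jira paths and JQL are assumptions based on the v2 REST API, so check them against the Jira docs. The descriptors use the `name` / `description` / `inputSchema` shape that MCP tool listings use, and the functions only describe the request rather than sending it.

```python
import inspect

TOOLS = {}  # tool name -> (descriptor, function); stand-in for an SDK's registry


def tool(description):
    """Register a function as a tool, deriving a simple input schema from its signature."""
    def decorator(fn):
        params = inspect.signature(fn).parameters
        descriptor = {
            "name": fn.__name__,
            "description": description,
            "inputSchema": {
                "type": "object",
                # Assumption for this sketch: every argument is a string.
                "properties": {name: {"type": "string"} for name in params},
                "required": list(params),
            },
        }
        TOOLS[fn.__name__] = (descriptor, fn)
        return fn
    return decorator


JIRA_BASE = "https://your-domain.atlassian.net"  # placeholder, not a real site


@tool("List the unresolved tickets assigned to the current user")
def list_my_tickets():
    return {
        "method": "GET",
        "url": f"{JIRA_BASE}/rest/api/2/search",  # assumed endpoint
        "params": {"jql": "assignee = currentUser() AND resolution = Unresolved"},
    }


@tool("Add a comment to a ticket")
def add_comment(ticket_key, comment):
    return {
        "method": "POST",
        "url": f"{JIRA_BASE}/rest/api/2/issue/{ticket_key}/comment",  # assumed endpoint
        "json": {"body": comment},
    }


def call_tool(name, arguments):
    """Dispatch a tool call by name, the way a server would on a client request."""
    _, fn = TOOLS[name]
    return fn(**arguments)
```

For example, `call_tool("add_comment", {"ticket_key": "PROJ-1", "comment": "Fixed in latest commit"})` returns the POST request that a real server would then send with an authenticated HTTP client.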

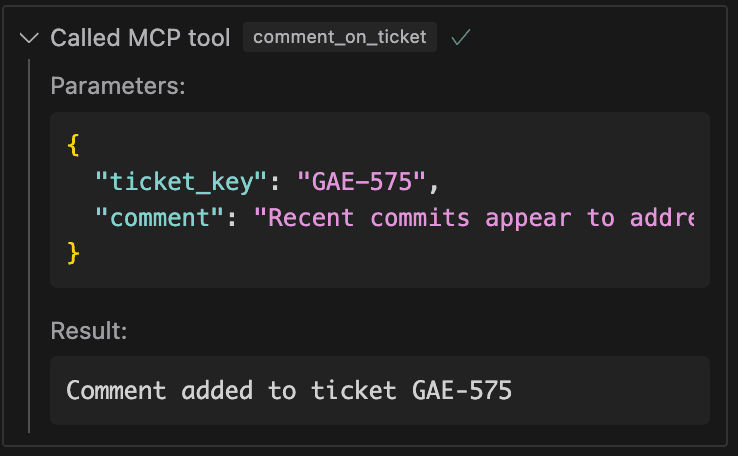

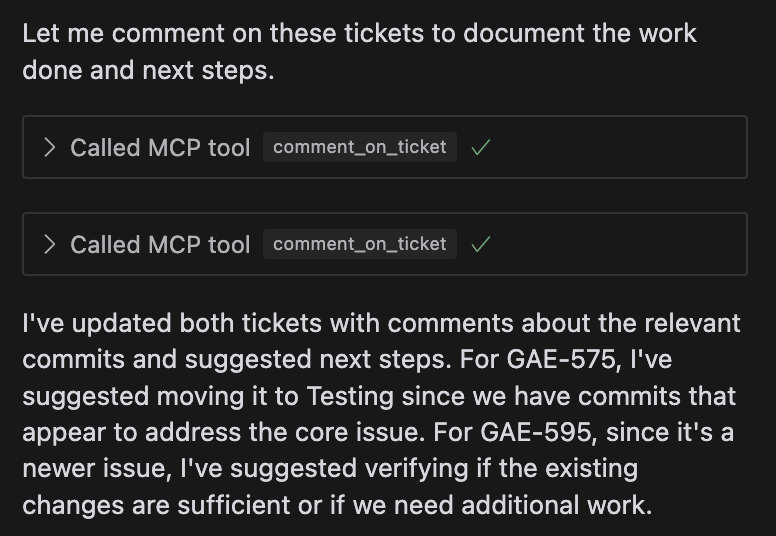

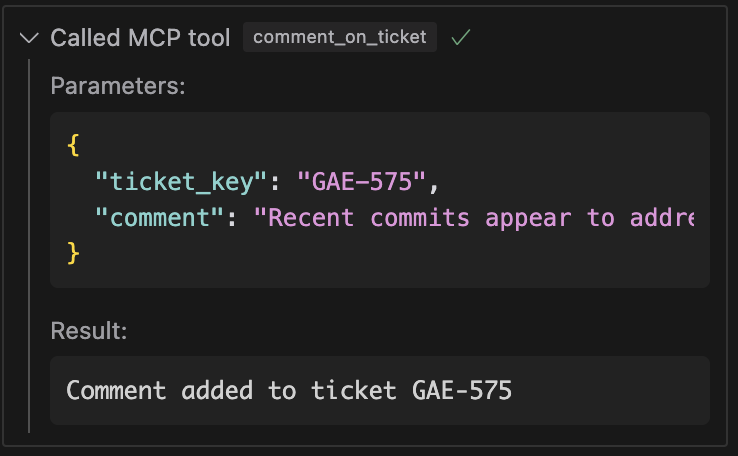

As you can see, I'm able to pass my git commits as context, and the AI automatically finds the relevant tickets and adds comments to them.

But what do you notice?

The UI is not great. It's difficult to see what the comment actually is. I have to scroll horizontally in this small window.

And this illustrates the entire point of this blog post: the MCP protocol needs a way for the server to provide UI components. In this case, I would like to show the ticket info in a sort of card element, with a button that brings you to the ticket, and perhaps a text input box that has the comment which can be edited before submitting, in case the AI got something wrong.

Since most LLM applications are chat boxes, and UI elements could be optional, this should be feasible.
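Here is a rough sketch of what I have in mind. To be clear, the `ui` field and the component types in it (`card`, `link_button`, `text_input`, `submit_button`) are entirely hypothetical and not part of MCP; only the `content` list of text items mirrors how tool results are returned today. The function names and the example ticket are made up for illustration.

```python
def ticket_comment_result(ticket_key, title, url, draft_comment):
    """Build a tool result with a plain-text fallback and an optional UI card."""
    return {
        # Text content, which any chat client can already display.
        "content": [
            {"type": "text", "text": f"{ticket_key}: {title}\nDraft comment: {draft_comment}"}
        ],
        # Hypothetical extension, NOT part of MCP: a declarative card description.
        "ui": {
            "type": "card",
            "title": f"{ticket_key}: {title}",
            "children": [
                {"type": "link_button", "label": "Open ticket", "href": url},
                {"type": "text_input", "id": "comment", "label": "Comment", "value": draft_comment},
                {"type": "submit_button", "label": "Post comment", "tool": "add_comment"},
            ],
        },
    }


def render(result, supports_ui):
    """Client side: use the card when supported, otherwise fall back to plain text."""
    if supports_ui and "ui" in result:
        return result["ui"]
    return "\n".join(part["text"] for part in result["content"] if part["type"] == "text")


result = ticket_comment_result(
    "PROJ-1", "Fix login", "https://your-domain.atlassian.net/browse/PROJ-1", "Fixed it"
)
```

A client that knows nothing about the `ui` field would call `render(result, supports_ui=False)` and show the same text it shows today, which is why making the components optional seems workable. A client that does support them could draw the card and let me edit the comment before it is posted.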

Conclusion

As my expertise lies more in AI infra and backend, I won't be the one proposing a framework. Instead, I'd like to simply put the idea out there. I'm curious if others have thought about this, and if there are any relevant tools or frameworks in the works.

Vercel's AI SDK comes to mind, but it's not quite it.

As AI models start hitting their limits, and different model providers seem to be converging in quality, I think UI will become a big differentiator in products.

I'm curious to see where this all goes. If you enjoyed this post, please connect with me on X.

P.S. I'm planning on moving to Substack, so subscribe for future posts!