From Hype to Reality: What It Really Means to Be a GenAI Engineer

AI Hype

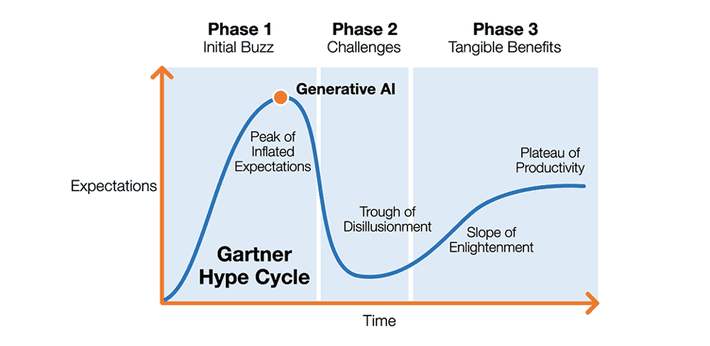

These days, there's a lot of hype around AI, and around GenAI in particular, with the advent of LLMs and image, audio, and video generation models. The use of 'generative AI' in job titles has seen growth that reminds me of the 'big data' days.

With all this hype, job titles and new terms comes a lot of confusion. This blog post aims to help clear up at least some of the confusion, by talking about my experience as a GenAI Engineer, and what the role means to me.

How I got here

I like to think I've had a pretty interesting career so far. I started at Google fresh out of college, then worked at a small AI startup under a twenty-something college dropout CEO (much love, Ben), and now I'm at an ad tech startup called Liftoff Mobile. My role has evolved from Software Engineer to Backend Engineer to Generative AI Engineer. Along the way, I've experienced the full spectrum of company sizes: from a massive corporation (100,000+ people) to a tiny startup (fewer than 20 people) to a mid-sized company (around 700 people).

At Google, I witnessed firsthand how AI transformed the company. When I joined in 2016, Google made a pivotal shift in strategy from "mobile first" to "AI first". I had the opportunity to work across several teams, each leveraging AI in distinct ways:

- On the Payment Fraud team, I built a feature store backend service that helped AI models detect fraudulent payments by analyzing signals like credit card entry time, language settings, and IP addresses.

- On the Google Assistant team, I worked with codebases powering both Search and Assistant, seeing how they used Natural Language Processing and decision trees to process user queries.

- On the YouTube Music team, I worked with recommendation systems to match users with new videos they might enjoy.

At WOMBO, I got much closer to the AI action. Though hired as a Backend Engineer, the small company size let me dive deep into AI projects. We stayed on the cutting edge by studying new research papers, productionizing open source models, and developing our own implementations. I worked extensively with image generation models like Stable Diffusion, scaling them to handle over 5 million monthly users and fine-tuning them for our needs. I also fine-tuned one of the first-ever video generation models and deployed it through our Discord bot. When GPT-3's APIs were first released, I began experimenting with LLMs as well.

At the time of this writing, I am a Generative AI Engineer at Liftoff Mobile. I work on projects that use Generative AI technologies to improve workflows across the company, such as using LLMs in Slack bots or using image generation for ad creatives.

GenAI vs AI

Let's explore what GenAI technologies are and how they differ from traditional AI technologies.

GenAI represents a new subset of AI that focuses on models that are both generative and more versatile in nature. At its core are Large Language Models (LLMs), which excel at diverse tasks like generating summaries, creating structured text (such as JSON or CSV files), and classifying documents. It also includes image generation models such as Stable Diffusion and DALL-E, as well as video and audio generation models.

More comprehensively, I would say that GenAI technologies include:

- LLMs and multimodal LLMs

  - These include ChatGPT, Gemini, etc. They take text, images, or audio as input and return text as output.

- Image Generation Models

  - For example: Stable Diffusion, DALL-E, Midjourney.

  - They usually take a text prompt as input and return an image.

  - Some of the models use more complex inputs, like reference style images, human pose, etc.

- Video Generation Models

  - These work like image generation models, but the output is a series of images that form a cohesive video.

  - They also include other video models, like lip-syncing models.

- Audio Generation Models

  - These include text-to-speech, music generation, and sound effect generation.

  - Deepgram and ElevenLabs are leaders in text-to-speech.

  - My blog post dives deeper into audio generation models: On Music Generation AI Models

A key distinction between a traditional AI/ML engineer and a GenAI engineer lies in their focus: while ML engineers create model architectures and train models, GenAI engineers specialize in implementing these models for practical, production-level applications.

Example Projects

Here are some example projects I've worked on:

- Using LLMs to create a Slack bot that uses RAG to make company knowledge more accessible to employees

- Building a support bot to help app developers implement Liftoff's ad SDK

- Leveraging LLMs to enhance the sales process

- Implementing image and video generation models for ad creative production

- Applying multimodal models for ad creative curation (including image defect detection using GPT-4V)

This list only scratches the surface. GenAI technologies have wide-reaching applications across many domains. While some use cases, like improving sales processes or knowledge management, are valuable for virtually any company, others—like ad creative generation—are specific to industries like ad tech.

A Couple Things to Note

The more time I spend working in this field, the more I realize that the hype has created a lot of confusion. Here are a couple things that I think are important to point out!

1. AI Agents Can Do Anything

Many people believe AI can accomplish anything if you simply ask it to. While AI capabilities have expanded significantly, much of what appears to be "magic" is actually careful engineering. Engineers must meticulously consider edge cases and test system limitations. When things seem to "just work," it's typically the result of countless hours of engineering and QA ensuring every edge case performs correctly. What appears to be a simple chatbot often comprises thousands of lines of code, multiple LLM prompts, backend calls, and complex logic—all working together to create the illusion of an autonomous agent.

2. Please Stop Converting Bullet Points to Longer Text

I've noticed a trend where people feed bullet points into LLMs (see the meme) to generate emails, design documents, and other written materials. This is a pet peeve of mine because, inevitably, the end user converts the expanded text back into bullet points. The LLM expansion serves only to create an illusion of more work being done.

Here's the crucial point: LLMs aren't good at adding genuine information to your input text. Asking them to do so risks introducing false or irrelevant information. Instead, LLMs excel at editing—improving readability, fixing grammar, and adjusting tone. They're fantastic editors but poor writers, largely because they lack their own real-world experience.

Important Technologies that I use

Finally, here is a list of important technologies that I use as a Generative AI Engineer. This list is by no means exhaustive, and I might write a longer post about these in the future.

- OpenAI's GPT API

  - gpt-4o-mini: this is probably the model I use the most. It's fast, and really cheap for how good it is. I use it to generate structured output. For example, I created this crossword generator, which includes both traditional hints and 'emoji' hints: web app here. I used gpt-4o-mini to generate a structured output that includes both an 'emoji' hint (a hint made exclusively of emojis) and a regular hint.

  - The great thing about structured output is that we can validate results using traditional programming. For example, for the emoji hint, we can validate that the string is purely emojis and contains no regular text. If validation fails, we can call gpt-4o-mini again and retry.

  - However, there are limitations. For more complex reasoning or structured output, I'd recommend using gpt-4o.

- Instructor

  - This is one of my favorite Python libraries when working with LLMs. It improves structured output by letting you define data classes using Pydantic (or Zod if using TypeScript) and pass those classes to the LLM call.

  - It makes prompt engineering closer to real engineering. In addition to writing prompts to guide the LLM, you write data classes and annotate them with comments. The data class is automatically converted to a prompt, and the Instructor library handles retries for you. You simply define a class and call the LLM, and the rest of your code can safely assume that the result adheres to the data class.

- Gemini

  - Gemini offers several models that handle longer context than any other LLM, so it's really useful when you have long inputs. It can also be used through the OpenAI-compatible API.

  - For example, I used Gemini to help me read the Texas Property Code when I had questions about renting a property.

  - OpenAI's models cannot fit the entire code into their context window, so they get questions wrong.

  - Gemini, on the other hand, can fit the entire code in its context window and can therefore answer questions much better.

- LLM Tracing: LangSmith, Lunary, Weave, etc.

  - Tracing is really useful for debugging. When something goes wrong, it helps to see the inputs and outputs of every step of the program's flow. This is true for traditional engineering as well as GenAI engineering.

  - The only difference with LLMs is that there are tools that make it easy to view data such as tokens used, the cost of each model call, prompt input and output, etc.

  - These tools are really easy to use and can save hours of debugging. Some of them take fewer than 5 lines of code to set up.

- Replicate and Fal

  - These two websites provide easy access to a wide range of GenAI models, from image generation to audio generation to LLMs.

  - They make it really easy to create prototypes without worrying about GPU infrastructure.
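To make the validate-and-retry idea from the structured output notes above more concrete, here is a minimal sketch in Python. It is not the crossword generator's actual code. The `Hint` class, `is_emoji_only`, `generate_hint`, and the `call_model` parameter are all hypothetical names chosen for illustration, and the model call is passed in as a plain function so the sketch runs without any API key. The emoji check is a rough heuristic based on Unicode categories; it will accept some non-emoji symbols and reject keycap emojis such as digit keycaps.

```python
import unicodedata
from dataclasses import dataclass
from typing import Callable

# Characters that appear inside emoji sequences without being symbols themselves.
ZERO_WIDTH_JOINER = "\u200d"
VARIATION_SELECTOR_16 = "\ufe0f"


@dataclass
class Hint:
    """Hypothetical structured output: one emoji-only hint and one regular hint."""
    emoji_hint: str
    text_hint: str


def is_emoji_only(text: str) -> bool:
    """Heuristic check (an assumption, not an official emoji test):
    every character must be a Unicode 'Symbol, other' (category So),
    a skin-tone modifier, or emoji glue, with at least one symbol present."""
    saw_symbol = False
    for ch in text:
        if ch in (ZERO_WIDTH_JOINER, VARIATION_SELECTOR_16):
            continue
        if 0x1F3FB <= ord(ch) <= 0x1F3FF:  # skin-tone modifiers
            continue
        if unicodedata.category(ch) != "So":
            return False
        saw_symbol = True
    return saw_symbol


def generate_hint(
    word: str,
    call_model: Callable[[str], Hint],
    max_attempts: int = 3,
) -> Hint:
    """Call the model until the emoji hint passes validation.

    `call_model` stands in for whatever function wraps the real LLM call.
    """
    for _ in range(max_attempts):
        hint = call_model(word)
        if is_emoji_only(hint.emoji_hint):
            return hint
    raise ValueError(f"no valid emoji hint for {word!r} after {max_attempts} attempts")
```

In practice, `call_model` would wrap the real LLM request and parse its structured response into a `Hint`. Capping the number of attempts keeps a consistently misbehaving prompt from looping forever and running up costs.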

Conclusion

Being a GenAI Engineer is about bridging the gap between cutting-edge AI technologies and practical business applications. While the field is often surrounded by hype and misconceptions, the reality involves careful engineering, thorough testing, and a deep understanding of both technical limitations and business needs.

Success in this role requires a combination of traditional software engineering skills, an understanding of AI capabilities and limitations, and the ability to identify genuine opportunities for AI implementation. It's not about chasing the latest AI trend, but about creating reliable, scalable solutions that provide real value.

As the field continues to evolve rapidly, staying informed about new developments while maintaining a practical, engineering-focused approach will be crucial. The most effective GenAI engineers will be those who can separate the facts from the fiction and deliver solutions that make a meaningful impact.

Thank you for reading! And before you ask, no, an LLM did not write this blog post. It did, however, help proofread.

P.S. My team is hiring! If you're interested in joining, please reach out to me.

P.P.S. I'm planning on moving to Substack, so subscribe for future posts!